Author: Chen Wei, Qianxin Technology

At WWDC 2023, Apple announced a series of upgrades to its operating systems and products. The biggest highlight, however, was Apple Vision Pro, Apple's first mixed reality (MR, that is, VR plus AR) device, which became a focus of global discussion as soon as it was announced.

Today marks the beginning of a new era of computing technology. Apple CEO Tim Cook said, "Just as Mac brought us into the era of personal computing, iPhone brought us into the era of mobile computing, Apple Vision Pro will bring us into the era of spatial computing."

1. Spatial computing

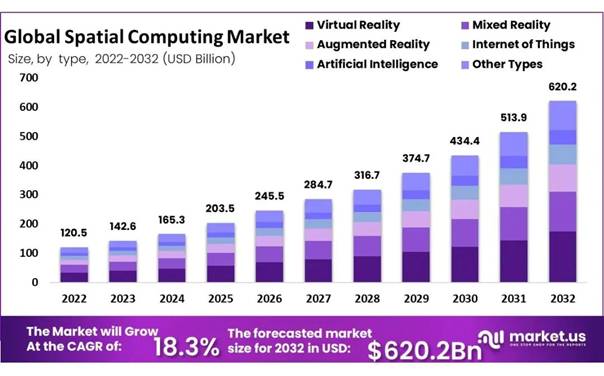

Spatial computing is a term introduced by Simon Greenwold of the Massachusetts Institute of Technology in his 2003 paper. Its practical application builds on advances in artificial intelligence (AI), computer vision, chips, and sensors. Typical application scenarios include automated warehousing, autonomous driving, industrial manufacturing, education and training, and gaming and entertainment.

In autonomous driving, for instance, identifying the surrounding environment, moving objects, and people through multi-sensor fusion and multimodal computing is a typical form of spatial computing. With stronger algorithms and chips, vehicles can handle more complex terrain and driving scenarios.

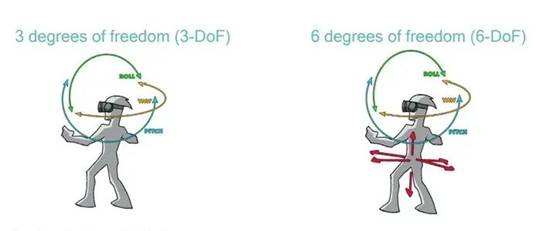

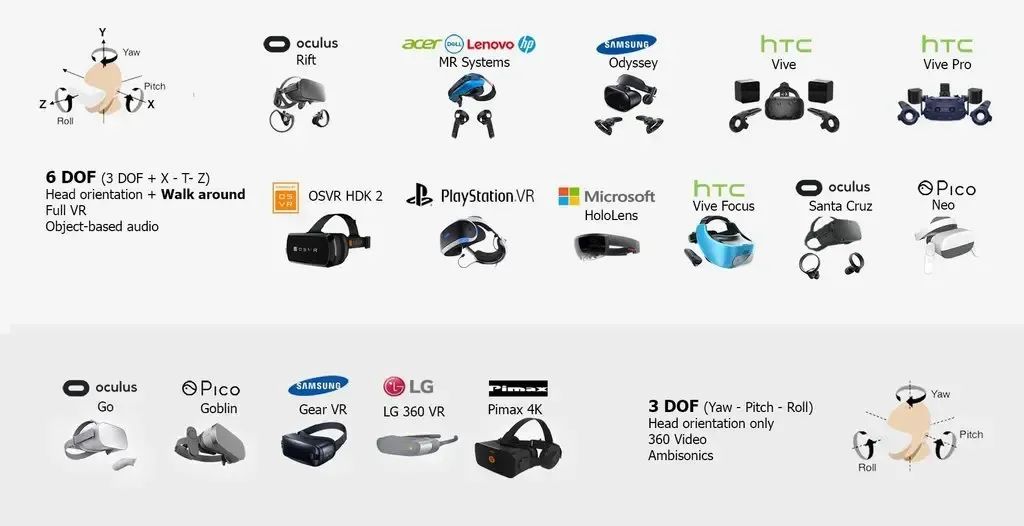

Apple Vision Pro is reported to support six degrees of freedom (6DoF). In a virtual scene, the user's viewpoint can rotate about three axes (pitch, yaw, and roll), and the user's body can also move along three mutually perpendicular axes: forward and backward, up and down, and left and right.
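To make the six degrees of freedom concrete, here is a minimal Python sketch of a 6DoF pose: three translations plus three rotations. The class name `Pose6DoF`, the axis assignment (roll about x, pitch about y, yaw about z), and the rotation order are assumptions chosen for illustration; they do not describe how Apple Vision Pro represents poses internally.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical 6DoF pose: 3 translations + 3 rotations."""
    # Translational degrees of freedom (metres).
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Rotational degrees of freedom (radians).
    roll: float = 0.0   # rotation about the x axis (assumed convention)
    pitch: float = 0.0  # rotation about the y axis (assumed convention)
    yaw: float = 0.0    # rotation about the z axis (assumed convention)

    def rotation_matrix(self):
        """Return R = Rz(yaw) * Ry(pitch) * Rx(roll) as a 3x3 nested list."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def to_world(self, point):
        """Map a point from the device frame to the world frame: R * p + t."""
        r = self.rotation_matrix()
        t = (self.x, self.y, self.z)
        return tuple(
            sum(r[i][j] * point[j] for j in range(3)) + t[i]
            for i in range(3)
        )


# A device that has moved 1 m along x and turned 90 degrees in yaw.
pose = Pose6DoF(x=1.0, yaw=math.pi / 2)
world_point = pose.to_world((1.0, 0.0, 0.0))
```

A 3DoF headset would track only the three rotation fields; adding the three translation fields is what lets the rendered scene respond when the user physically walks, crouches, or leans.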

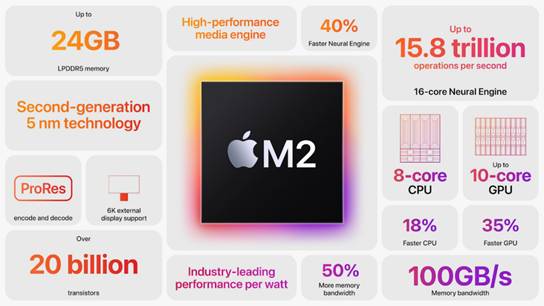

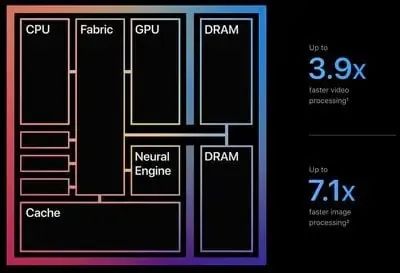

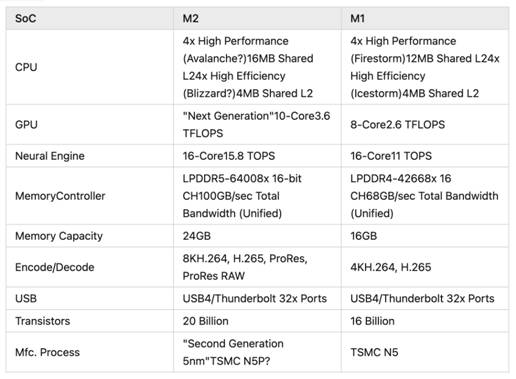

The core chips of Apple Vision Pro are the R1 sensor-processing chip and the M2 computing chip. Beyond these two chips, Apple Vision Pro also features 17 sensors, 6 microphones, and two 4K-class micro-OLED displays.

According to Apple, the new R1 chip can reduce motion sickness in VR, with a multimodal processing latency of only 12 milliseconds. The R1 makes its debut in the Apple product family with Vision Pro, and very little information about it has been disclosed so far.

R1 and M2 are the core processors of Apple Vision Pro. (Source: Apple)

Code in iOS 13 had already revealed the existence of this new coprocessor. R is the abbreviation of its development code name, "Rose", though one could also guess that R stands for Reality. The first iteration of the Rose coprocessor, R1 (t2006), is somewhat similar to Apple's M-series motion coprocessors: it can calculate the position of the device in space, and its core function is to offload a large amount of sensor data processing from the main system processor.

What sets R1 apart is that it connects to more sensors than previous motion coprocessors and has more processing capacity, so it can produce a more accurate map of the device's location. The R1 processor can centrally process multimodal data from gyroscopes, accelerometers, barometers, and microphones, and adds multimodal computing support for data from inertial measurement units (IMUs), Bluetooth 5.1, ultra-wideband (UWB), and camera sensors (including motion capture and optical tracking). Among these, the angle of arrival (AoA) and angle of departure (AoD) features of Bluetooth 5.1 enable Bluetooth direction finding.
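As a rough illustration of how angle-of-arrival direction finding works in principle, the Python sketch below uses the textbook two-antenna model: a plane wave arriving at angle θ from broadside reaches two antennas spaced d apart with a phase difference Δφ = 2πd·sin(θ)/λ, so θ = arcsin(Δφ·λ / (2πd)). The function name, the 2.44 GHz default carrier, and the two-antenna setup are assumptions for illustration; this is neither the Bluetooth specification's IQ-sampling procedure nor anything known about the R1.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def angle_of_arrival(phase_diff_rad, antenna_spacing_m, freq_hz=2.44e9):
    """Estimate the arrival angle (radians from broadside) of a plane wave.

    phase_diff_rad: phase difference measured between two antennas.
    antenna_spacing_m: distance between the antennas (at most half a
        wavelength if the result is to be unambiguous).
    freq_hz: carrier frequency; 2.44 GHz is an assumed mid-band value.
    """
    wavelength = SPEED_OF_LIGHT / freq_hz
    sin_theta = phase_diff_rad * wavelength / (2 * math.pi * antenna_spacing_m)
    if not -1.0 <= sin_theta <= 1.0:
        raise ValueError("phase difference is inconsistent with this spacing")
    return math.asin(sin_theta)


# Antennas half a wavelength apart, measured phase difference of 90 degrees.
wavelength = SPEED_OF_LIGHT / 2.44e9
theta = angle_of_arrival(math.pi / 2, wavelength / 2)
theta_degrees = math.degrees(theta)  # about 30 degrees
```

Real receivers use antenna arrays with more than two elements and have to deal with multipath and noise, which is one reason offloading this work to a dedicated sensor processor is attractive.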

Since the sensors connected to the R1 chip require real-time computing, it can be inferred that R1 is a low-power, low-latency multimodal sensor fusion chip. It likely includes custom circuits for operators commonly used in sensor processing, along with an NPU to support AI-based sensor algorithms.
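Sensor fusion itself can be illustrated with one of the simplest techniques, a complementary filter that blends a gyroscope (smooth but drifting) with an accelerometer (noisy but drift-free) to estimate a tilt angle. The Python sketch below is only an illustration of the general idea; the function name, the blending factor `alpha`, and the sample values are assumptions, and nothing here reflects the algorithms actually running on the R1.

```python
import math


def complementary_filter(angle_prev, gyro_rate, accel_y, accel_z, dt, alpha=0.98):
    """Fuse gyroscope and accelerometer readings into one tilt estimate.

    angle_prev: previous tilt estimate (radians).
    gyro_rate: angular velocity from the gyroscope (radians per second).
    accel_y, accel_z: accelerometer components used to infer tilt from gravity.
    dt: time step in seconds.
    alpha: weight given to the integrated gyroscope path (assumed value).
    """
    gyro_angle = angle_prev + gyro_rate * dt   # short-term: integrate rotation
    accel_angle = math.atan2(accel_y, accel_z)  # long-term: gravity direction
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


# Device held still at a 0.1 rad tilt; the estimate starts at zero.
true_tilt = 0.1
angle = 0.0
for _ in range(500):
    angle = complementary_filter(
        angle,
        gyro_rate=0.0,
        accel_y=9.81 * math.sin(true_tilt),
        accel_z=9.81 * math.cos(true_tilt),
        dt=0.001,
    )
```

Each update is a handful of multiply-adds, but it has to run for every sensor sample within a tight time budget, which is the kind of workload that suits dedicated low-latency hardware rather than the main application processor.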

The Apple M2 is Apple's system-on-chip (SoC) and was previously used in the MacBook Air and the 13-inch MacBook Pro.

The M2 can be regarded as the most power-hungry component in Vision Pro, accounting for most of its power consumption.

ICVIEWS Think Tank expert

Dr. Chen Wei

Chairman of Qianxin Technology and an expert in integrated storage and computing, GPU architecture, and AI, holding a senior professional title. He is an expert with the Zhongguancun Cloud Computing Industry Alliance and the Chinese Society of Optical Engineering. He previously served as chief scientist of an AI enterprise and as 3D NAND design director at a major memory chip manufacturer. He led the first 3D NAND flash memory design team in mainland China, as well as work on an eFlash IP core compiler and a RISC-V/x86/Arm-compatible AI acceleration compiler. His related work was on par with that of Samsung, TSMC, and SST. He graduated from Tsinghua University and holds over 70 invention patents and software copyrights in China and the United States.
